Revealed – the capitalist network that runs the world

By wmw_admin on October 21, 2011

Andy Coghlan and Debbie MacKenzie, New Scientist, October 19, 2011

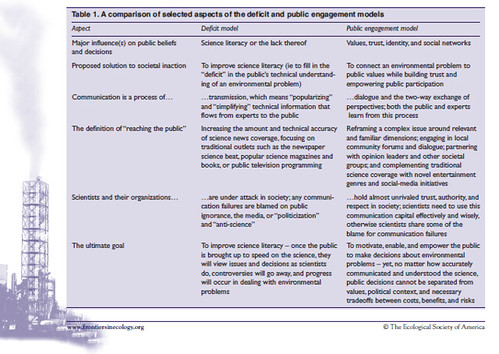

[Caption: The 1318 transnational corporations that form the core of the economy. Superconnected companies are red, very connected companies are yellow. The size of the dot represents revenue.]

AS PROTESTS against financial power sweep the world this week, science may have confirmed the protesters’ worst fears. An analysis of the relationships between 43,000 transnational corporations has identified a relatively small group of companies, mainly banks, with disproportionate power over the global economy.

The study’s assumptions have attracted some criticism, but complex systems analysts contacted by New Scientist say it is a unique effort to untangle control in the global economy. Pushing the analysis further, they say, could help to identify ways of making global capitalism more stable.

The idea that a few bankers control a large chunk of the global economy might not seem like news to New York’s Occupy Wall Street movement and protesters elsewhere. But the study, by a trio of complex systems theorists at the Swiss Federal Institute of Technology in Zurich, is the first to go beyond ideology to empirically identify such a network of power. It combines the mathematics long used to model natural systems with comprehensive corporate data to map ownership among the world’s transnational corporations (TNCs).

“Reality is so complex, we must move away from dogma, whether it’s conspiracy theories or free-market,” says James Glattfelder. “Our analysis is reality-based.”

Previous studies have found that a few TNCs own large chunks of the world’s economy, but they included only a limited number of companies and omitted indirect ownerships, so could not say how this affected the global economy – whether it made it more or less stable, for instance.

The Zurich team can. From Orbis 2007, a database listing 37 million companies and investors worldwide, they pulled out all 43,060 TNCs and the share ownerships linking them. Then they constructed a model of which companies controlled others through shareholding networks, coupled with each company’s operating revenues, to map the structure of economic power.
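To make the idea of indirect ownership concrete, here is a minimal Python sketch. It is not the Zurich team's model, whose details are not given here; it simply assumes that a stake held through an intermediary is the product of the stakes along the chain, and that the value a shareholder reaches is its accumulated stake multiplied by each company's operating revenue. The company names, stakes and revenues are invented for illustration.

```python
def integrated_ownership(direct, max_hops=50):
    """Accumulate direct and indirect stakes by following ownership chains.

    `direct` maps owner -> {company: fraction of shares held directly}.
    Assumption: a stake held through a chain is the product of the stakes
    along it. Chains are followed for at most `max_hops` steps, which also
    truncates any loops created by cross-shareholdings.
    """
    names = sorted(direct.keys() | {t for held in direct.values() for t in held})
    total = {owner: {} for owner in names}
    frontier = {owner: dict(direct.get(owner, {})) for owner in names}
    for _ in range(max_hops):
        next_frontier = {owner: {} for owner in names}
        for owner in names:
            for company, share in frontier[owner].items():
                total[owner][company] = total[owner].get(company, 0.0) + share
                # Extend the chain one step through `company`'s own holdings.
                for target, sub_share in direct.get(company, {}).items():
                    next_frontier[owner][target] = (
                        next_frontier[owner].get(target, 0.0) + share * sub_share
                    )
        frontier = next_frontier
        if not any(frontier.values()):
            break
    return total


def reachable_revenue(total, revenue):
    """Weight each accumulated stake by the held company's operating revenue."""
    return {
        owner: sum(share * revenue.get(company, 0.0) for company, share in held.items())
        for owner, held in total.items()
    }


# Hypothetical toy data: A holds 50% of B, and B holds 40% of C.
direct = {"A": {"B": 0.5}, "B": {"C": 0.4}}
revenue = {"A": 0.0, "B": 100.0, "C": 200.0}

stakes = integrated_ownership(direct)
value = reachable_revenue(stakes, revenue)
print(stakes["A"])  # A's stake in B is direct, its stake in C is indirect
print(value["A"])
```

In this toy case A never buys a share of C, yet it ends up with a 20 per cent indirect stake in it. That is the kind of link that studies limited to direct ownership would miss.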

The work, to be published in PLoS ONE, revealed a core of 1318 companies with interlocking ownerships (see image). Each of the 1318 had ties to two or more other companies, and on average they were connected to 20. What’s more, although they represented 20 per cent of global operating revenues, the 1318 appeared to collectively own through their shares the majority of the world’s large blue chip and manufacturing firms – the “real” economy – representing a further 60 per cent of global revenues.

When the team further untangled the web of ownership, it found much of it tracked back to a “super-entity” of 147 even more tightly knit companies – all of their ownership was held by other members of the super-entity – that controlled 40 per cent of the total wealth in the network. “In effect, less than 1 per cent of the companies were able to control 40 per cent of the entire network,” says Glattfelder. Most were financial institutions. The top 20 included Barclays Bank, JPMorgan Chase & Co, and The Goldman Sachs Group.
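The “less than 1 per cent” figure can be checked from the counts quoted in the article. The short Python calculation below uses only those counts (43,060 TNCs, a core of 1318, a super-entity of 147); the function name is just a label chosen for this example.

```python
TOTAL_TNCS = 43_060   # TNCs pulled from the Orbis 2007 database
CORE = 1_318          # companies in the interlocking core
SUPER_ENTITY = 147    # companies in the tightly knit "super-entity"


def share_of_tncs(count, total=TOTAL_TNCS):
    """Return `count` as a percentage of all TNCs in the study."""
    return 100.0 * count / total


print(f"core: {share_of_tncs(CORE):.2f}% of TNCs")                  # core: 3.06% of TNCs
print(f"super-entity: {share_of_tncs(SUPER_ENTITY):.2f}% of TNCs")  # super-entity: 0.34% of TNCs
```

So the super-entity amounts to roughly a third of 1 per cent of the companies analysed, and the wider core to about 3 per cent.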

John Driffill of the University of London, a macroeconomics expert, says the value of the analysis is not just to see if a small number of people controls the global economy, but rather its insights into economic stability.

Concentration of power is not good or bad in itself, says the Zurich team, but the core’s tight interconnections could be. As the world learned in 2008, such networks are unstable. “If one [company] suffers distress,” says Glattfelder, “this propagates.”

“It’s disconcerting to see how connected things really are,” agrees George Sugihara of the Scripps Institution of Oceanography in La Jolla, California, a complex systems expert who has advised Deutsche Bank.

Yaneer Bar-Yam, head of the New England Complex Systems Institute (NECSI), warns that the analysis assumes ownership equates to control, which is not always true. Most company shares are held by fund managers who may or may not control what the companies they part-own actually do. The impact of this on the system’s behaviour, he says, requires more analysis.

Crucially, by identifying the architecture of global economic power, the analysis could help make it more stable. By finding the vulnerable aspects of the system, economists can suggest measures to prevent future collapses spreading through the entire economy.

Glattfelder says we may need global anti-trust rules, which now exist only at national level, to limit over-connection among TNCs. Bar-Yam says the analysis suggests one possible solution: firms should be taxed for excess interconnectivity to discourage this risk.

One thing won’t chime with some of the protesters’ claims: the super-entity is unlikely to be the intentional result of a conspiracy to rule the world. “Such structures are common in nature,” says Sugihara.

Newcomers to any network connect preferentially to highly connected members. TNCs buy shares in each other for business reasons, not for world domination.
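The “connect preferentially” rule is easy to simulate. The Python sketch below is a generic preferential-attachment toy, not the Zurich analysis: it assumes each newcomer makes exactly one link, and that it picks its partner with probability proportional to the number of links that partner already has. The function name and network size are arbitrary choices for illustration.

```python
import random


def grow_network(n_nodes, seed=0):
    """Grow a network one node at a time under preferential attachment.

    Returns a list where entry i is the number of links held by node i.
    Assumption: every newcomer makes a single link.
    """
    rng = random.Random(seed)
    degree = [1, 1]      # start from two nodes joined by one link
    endpoints = [0, 1]   # each node is listed once per link it holds
    for new in range(2, n_nodes):
        # Drawing uniformly from `endpoints` favours well-connected nodes,
        # because they appear in the list more often.
        target = rng.choice(endpoints)
        degree[target] += 1
        degree.append(1)
        endpoints.extend([target, new])
    return degree


degrees = grow_network(1000)
print("links held by the best-connected node:", max(degrees))
print("nodes holding a single link:", degrees.count(1))
```

Runs of this kind typically end with a handful of heavily linked hubs and a large majority of nodes holding a single link, with no coordination among the members.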

If connectedness clusters, so does wealth, says Dan Braha of NECSI: in similar models, money flows towards the most highly connected members. The Zurich study, says Sugihara, “is strong evidence that simple rules governing TNCs give rise spontaneously to highly connected groups”. Or as Braha puts it: “The Occupy Wall Street claim that 1 per cent of people have most of the wealth reflects a logical phase of the self-organising economy.”

So, the super-entity may not result from conspiracy. The real question, says the Zurich team, is whether it can exert concerted political power. Driffill feels 147 is too many to sustain collusion. Braha suspects they will compete in the market but act together on common interests. Resisting changes to the network structure may be one such common interest.

The top 50 of the 147 superconnected companies

1. Barclays plc

2. Capital Group Companies Inc

3. FMR Corporation

4. AXA

5. State Street Corporation

6. JP Morgan Chase & Co

7. Legal & General Group plc

8. Vanguard Group Inc

9. UBS AG

10. Merrill Lynch & Co Inc

11. Wellington Management Co LLP

12. Deutsche Bank AG

13. Franklin Resources Inc

14. Credit Suisse Group

15. Walton Enterprises LLC

16. Bank of New York Mellon Corp

17. Natixis

18. Goldman Sachs Group Inc

19. T Rowe Price Group Inc

20. Legg Mason Inc

21. Morgan Stanley

22. Mitsubishi UFJ Financial Group Inc

23. Northern Trust Corporation

24. Société Générale

25. Bank of America Corporation

26. Lloyds TSB Group plc

27. Invesco plc

28. Allianz SE

29. TIAA

30. Old Mutual Public Limited Company

31. Aviva plc

32. Schroders plc

33. Dodge & Cox

34. Lehman Brothers Holdings Inc*

35. Sun Life Financial Inc

36. Standard Life plc

37. CNCE

38. Nomura Holdings Inc

39. The Depository Trust Company

40. Massachusetts Mutual Life Insurance

41. ING Groep NV

42. Brandes Investment Partners LP

43. Unicredito Italiano SPA

44. Deposit Insurance Corporation of Japan

45. Vereniging Aegon

46. BNP Paribas

47. Affiliated Managers Group Inc

48. Resona Holdings Inc

49. Capital Group International Inc

50. China Petrochemical Group Company

* Lehman still existed in the 2007 dataset used
